
A Complete Guide to Outsourcing Video Data Annotation

Video annotation, just like image annotation, is used to train AI and machine learning models to see and recognize real-world objects. But because video adds motion and time, collecting and annotating video data is more complex than working with static images.

Scaling machine learning and data labeling projects has become indispensable in the world of tech. As consumers continue to enjoy their devices’ “eyes” (unlocking phones with their faces, trying on virtual makeup in social apps, or translating menus in a foreign country), companies need to keep up with both demand and expectations.

To put things into perspective, a recent estimate values the computer vision market at $48.6 billion [1], with social media, banking, and automotive companies among the key players. Figures like this suggest that businesses recognize the importance of training data in machine learning for staying competitive.

The biggest challenge for video data annotation services is the immense amount of data they need to process to properly power AI models. To unlock an AI model’s full potential, a business must first provide it with accurate, consistent, and high-quality data that minimizes performance errors. This is why video data collection and annotation are a crucial first step in launching a successful AI project.

What is video data annotation?

Video data annotation is the process of labeling and tagging video data so that machines can recognize the objects in it. Both video and image annotations are primarily used to train machine learning and deep learning models, and self-driving cars are one of the most groundbreaking examples.


Aside from detecting objects in a video, video annotation allows computers to track movement, estimate human poses, and localize an object by marking its boundaries. With these functions, an autonomous vehicle, for example, can recognize different objects on the street while it navigates through traffic lanes.

Video annotation is more challenging than image annotation because the target objects are often in motion, which increases the risk of misclassifying them. Misclassification is one of the biggest challenges video annotation services face. To tag video data accurately, annotators often outline objects frame by frame, which makes the process more time-consuming and complex.

One way to ensure dataset quality is human-assisted data labeling. With humans in the loop, reviewers can quickly generate practical insights, so gaps are found and improvements are made promptly.
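
A common way to put human-assisted labeling into practice is to let a model pre-label frames and send only its low-confidence guesses to human annotators. The sketch below assumes that workflow; the `PreLabel` record, the `route_for_review` helper, and the 0.8 threshold are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PreLabel:
    """A hypothetical model-generated label awaiting routing."""
    frame: int
    label: str
    confidence: float  # model score between 0 and 1


def route_for_review(
    prelabels: List[PreLabel], threshold: float = 0.8
) -> Tuple[List[PreLabel], List[PreLabel]]:
    """Split pre-labels into an auto-accept list and a human-review queue.

    The 0.8 default is an illustrative assumption, not a recommended value.
    """
    accepted: List[PreLabel] = []
    needs_review: List[PreLabel] = []
    for p in prelabels:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    return accepted, needs_review


batch = [PreLabel(0, "car", 0.95), PreLabel(1, "pedestrian", 0.55), PreLabel(2, "car", 0.81)]
auto, review = route_for_review(batch)
print([p.frame for p in review])  # [1]
```

Human reviewers then spend their time on the uncertain frames, and their corrections can be fed back to improve the model.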

Put simply, accurate video annotation requires labeling many more frames, and therefore more resources, than image annotation. Without the right approach, video data tagging can be a labor-intensive, expensive, and lengthy process.
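
A quick back-of-the-envelope calculation shows how fast frame counts grow. This sketch assumes a 10-minute clip at 30 frames per second; `frames_to_label` is a hypothetical helper, and `sample_every` models labeling only every nth frame.

```python
import math


def frames_to_label(duration_s: float, fps: float, sample_every: int = 1) -> int:
    """Number of frames an annotator must label.

    sample_every=1 labels every frame; sample_every=10 labels one frame in ten.
    """
    total_frames = math.floor(duration_s * fps)
    return math.ceil(total_frames / sample_every)


print(frames_to_label(10 * 60, 30))      # 18000
print(frames_to_label(10 * 60, 30, 10))  # 1800
```

A single image is one frame; ten minutes of video is thousands of them, even when only a fraction are labeled by hand.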

Different Types of Video Data Annotation Services

Video data can be annotated using either the single frame method or the streaming video method.

To use the single frame method, annotators extract frames from the video and annotate them one by one. It works just like image annotation, only it is far more time-consuming.

On the other hand, the streaming video method allows the annotators to tag and label objects while they’re in motion, making this the more efficient process of the two.
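
Many annotation tools support motion by having annotators label an object on a few keyframes and then filling in the frames between them. The sketch below assumes the simplest version, linear interpolation of bounding boxes stored as `(x_min, y_min, x_max, y_max)`; real tools may use object tracking instead, and `interpolate_boxes` is a hypothetical name.

```python
from typing import Dict, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def interpolate_boxes(keyframes: Dict[int, Box]) -> Dict[int, Box]:
    """Fill in a box for every frame between manually labeled keyframes."""
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    result: Dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        for f in range(start, end):
            t = (f - start) / (end - start)  # 0.0 at the start keyframe
            result[f] = tuple(av + t * (bv - av) for av, bv in zip(a, b))
    result[frames[-1]] = keyframes[frames[-1]]  # keep the last keyframe as labeled
    return result


# An object labeled on frames 0 and 4 only; frames 1 to 3 are filled in.
boxes = interpolate_boxes({0: (10, 10, 50, 50), 4: (30, 10, 70, 50)})
print(boxes[2])  # (20.0, 10.0, 60.0, 50.0)
```

Annotators then only correct the frames where the interpolated box drifts off the object, which is where the time savings over frame-by-frame labeling come from.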

Depending on the project at hand, video data can be annotated in several ways.

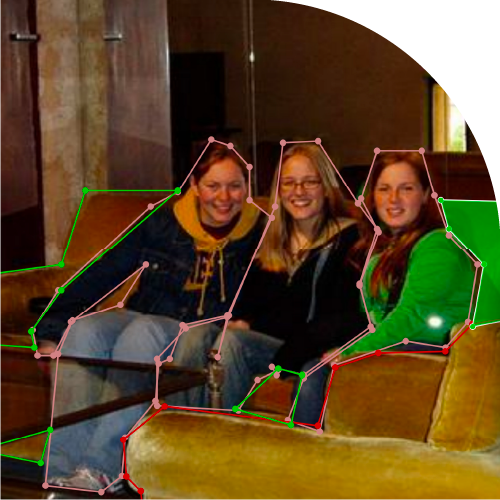

Polygon Lines

Polygon annotation is one of the most flexible methods and can closely follow an object’s real shape. The annotator places points along the object’s outer edges, and these points are joined by lines into a closed polygon.
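
A polygon annotation is typically stored as an ordered list of (x, y) vertices. As an illustration (the function names and the L-shaped example are hypothetical), the shoelace formula gives the area the polygon encloses, and comparing it with the tightest enclosing box shows how much more closely a polygon fits an irregular shape.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(vertices: List[Point]) -> float:
    """Area enclosed by a polygon annotation, via the shoelace formula."""
    n = len(vertices)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def polygon_to_bbox(vertices: List[Point]) -> Tuple[float, float, float, float]:
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (min(xs), min(ys), max(xs), max(ys))


# An L-shaped object: the polygon hugs its outline, the box does not.
shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)]
print(polygon_area(shape))     # 6.0
print(polygon_to_bbox(shape))  # (0, 0, 4, 3), i.e. a 4 x 3 box of area 12
```

Here the enclosing box covers 12 square pixels while the object covers only 6, so half of the box would be background.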

Bounding Boxes

Bounding box annotation uses rectangular boxes to enclose each object as tightly as possible in each frame. This allows machines to determine an object’s position using x and y coordinates. It is one of the most common annotation methods and one of the least expensive. Unlike polygons, however, bounding boxes are a poor fit for irregularly shaped objects, because the rectangle inevitably includes background around the object.
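
Because a box is just two corner coordinates, comparing two boxes is cheap. A standard measure is intersection over union (IoU), which quality checks often use to compare an annotator’s box with a reference box. This sketch assumes the `(x_min, y_min, x_max, y_max)` convention; some formats store `(x, y, width, height)` instead.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Overlap is zero when the boxes do not intersect.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


annotator = (10, 10, 50, 50)
reference = (20, 10, 60, 50)
print(round(iou(annotator, reference), 3))  # 0.6
```

An IoU of 1.0 means the boxes match exactly and 0.0 means they do not overlap at all.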

Semantic Segmentation

All images are made up of small units called pixels. In semantic segmentation, or pixel-level segmentation, every pixel is assigned a class label that identifies which kind of object it belongs to. Because it gives a granular understanding of the objects in an image, semantic segmentation is considered one of the most accurate data annotation methods and is commonly applied in consumer-facing creativity tools.
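
A semantic segmentation label can be thought of as a mask the same size as the image, holding one class ID per pixel. The tiny mask and the class names here are made up for illustration; the sketch counts how many pixels belong to each class.

```python
from collections import Counter

# Hypothetical class IDs for illustration.
CLASSES = {0: "background", 1: "road", 2: "car"}

# A tiny 4 x 6 mask: one class ID per pixel.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 2, 2, 0, 0],
    [1, 1, 2, 2, 1, 1],
    [1, 1, 1, 1, 1, 1],
]


def class_pixel_counts(mask):
    """Count how many pixels carry each class label."""
    counts = Counter(pixel for row in mask for pixel in row)
    return {CLASSES[c]: n for c, n in sorted(counts.items())}


print(class_pixel_counts(mask))  # {'background': 10, 'road': 10, 'car': 4}
```

Because every pixel carries a label, a real mask holds as many labels as the image has pixels, which is why this method is so labor-intensive.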

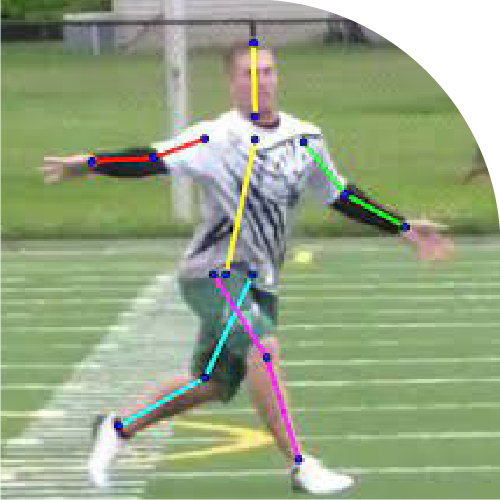

Keypoint Tracking

Keypoint tracking uses dots and lines to capture objects in detail. It is usually used to label human poses, landmarks, or other objects that vary in shape. Keypoint tracking can also capture facial features and expressions for use in augmented reality (AR) or virtual reality (VR) animations.
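
A keypoint annotation pairs named points (the dots) with a skeleton that says which points are connected (the lines). The keypoint names, coordinates, and `limb_lengths` helper here are hypothetical; measuring edge lengths like this is one simple way to flag implausible poses, for example a forearm whose length jumps sharply between frames.

```python
import math

# Hypothetical keypoint labels for one person in one frame: name -> (x, y) in pixels.
keypoints = {
    "left_shoulder": (100, 100),
    "left_elbow": (100, 140),
    "left_wrist": (130, 180),
}

# The "lines": which keypoints are connected in the skeleton.
SKELETON = [("left_shoulder", "left_elbow"), ("left_elbow", "left_wrist")]


def limb_lengths(kps, skeleton):
    """Pixel length of each skeleton edge whose endpoints are both labeled."""
    return {
        (a, b): math.dist(kps[a], kps[b])
        for a, b in skeleton
        if a in kps and b in kps  # skip edges with an occluded or unlabeled endpoint
    }


lengths = limb_lengths(keypoints, SKELETON)
print(lengths)  # {('left_shoulder', 'left_elbow'): 40.0, ('left_elbow', 'left_wrist'): 50.0}
```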

Landmark Annotation

Like keypoint tracking, landmark annotation is commonly used to recognize facial features or expressions. With landmark annotation, specific points of interest in an image are tagged, producing precise results that can capture even very small features of an object.
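
Landmark accuracy is often measured as a normalized mean error (NME): the average distance between annotated and reference landmarks, divided by a reference length such as the distance between the eyes, so that the score does not depend on face size. The three-landmark example and the `normalized_mean_error` helper are illustrative assumptions.

```python
import math


def normalized_mean_error(annotated, reference, norm_distance):
    """Mean landmark distance between two point sets, divided by a normalizing length."""
    if len(annotated) != len(reference) or not reference:
        raise ValueError("landmark sets must be non-empty and the same length")
    errors = [math.dist(p, r) for p, r in zip(annotated, reference)]
    return sum(errors) / len(errors) / norm_distance


# Hypothetical landmarks: left eye, right eye, nose tip.
reference = [(30, 40), (70, 40), (50, 60)]
annotated = [(33, 44), (70, 40), (50, 60)]  # left eye is off by 5 pixels
interocular = math.dist(reference[0], reference[1])  # 40.0
nme = normalized_mean_error(annotated, reference, interocular)
print(round(nme, 4))  # 0.0417
```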

Cuboid Annotation

Cuboid annotation is similar to bounding box annotation but adds depth: it uses cuboids (3D boxes, drawn either on 2D frames or in 3D space) to capture an object’s length, width, and height. Annotators place anchor points at the object’s edges, making the object easier for AI models to recognize.
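
A 3D cuboid is commonly described by a center point plus its length, width, and height, from which the eight corner anchor points follow. This sketch ignores rotation to keep things short (real 3D annotations often also store a heading angle); `cuboid_corners` is a hypothetical helper.

```python
from itertools import product


def cuboid_corners(center, size):
    """Eight corners of an axis-aligned cuboid.

    center: (cx, cy, cz); size: (length, width, height).
    Rotation is ignored here for simplicity.
    """
    cx, cy, cz = center
    length, width, height = size
    # Each corner takes either the minus or plus half-extent along every axis.
    return [
        (cx + sx * length / 2, cy + sy * width / 2, cz + sz * height / 2)
        for sx, sy, sz in product((-1, 1), repeat=3)
    ]


corners = cuboid_corners(center=(0.0, 0.0, 1.0), size=(4.0, 2.0, 2.0))
print(len(corners))  # 8
print(corners[0])    # (-2.0, -1.0, 0.0)
```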

Why is there a growing need for video data annotation in machine learning?

In recent years, AI has become an indispensable part of key industries and businesses. With the increasing use of computer vision in fields such as healthcare, autonomous vehicles, and media, there is growing demand for accurate annotation of large amounts of raw, unprocessed data that can be used to train machine learning algorithms.


How to Choose the Right Video Annotation Partner

Businesses see growth opportunities in the many applications of video data annotation. The increasing use of mobile computing platforms and digital transactions for online shopping is currently driving demand for data collection and labeling.

Cost-effectiveness and scalability are the leading benefits companies can gain from outsourcing video annotation. Besides assessing a potential partner’s capabilities, it’s important to consider how its services fit your specific needs.

Choosing the right video annotation partner is much like choosing an image annotation partner. Here are a few things to consider if you’re preparing for a video annotation project:

- Identify your specific needs

Clearly define the goals and needs of your project. Specifying your needs will help you determine the most efficient solutions for the goals you want to achieve.

Pinpoint your biggest requirements by asking your team the right questions:

  - Why do you want the data to be annotated?

  - What are the goals for your project?

  - What would you need in terms of timeline, data format, and budget?

- Determine the vendor’s technical capabilities

Narrow down your choices by looking at your potential vendors’ proficiency. How much experience do they have in your field? Chances of success are higher if they have a solid track record that’s relevant to your specific industry or business.

Check the quality of the tools and technology they use. Are they capable of performing video annotation with speed and precision?

- Choose a scalable workforce

When it comes to choosing the right data labeling partner, it’s important to consider your potential vendor’s workforce as much as the tools they use.

Humans are still the best choice for keeping AI in check. Producing highly accurate and consistent data is a rigorous task that still requires expert human judgment, which is why it’s important to have the right people handle the process.

Find out whether your potential vendor’s team has the skills, knowledge, and training required to ensure your project’s success. If safety, security, and unbiased, accurate AI results are priorities for your project, look for a vendor that can provide a well-trained workforce drawn from different parts of the world.
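
One practical way to check a vendor’s precision is to have them label a small pilot batch that your team has already labeled, then compare their boxes with your reference (“gold”) boxes. The sketch below assumes bounding-box labels, an IoU threshold of 0.5, and simple greedy matching; formal benchmarks use more careful matching, and every name here is hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def pilot_precision_recall(
    vendor: List[Box], gold: List[Box], threshold: float = 0.5
) -> Tuple[float, float]:
    """Greedily match each vendor box to its best unmatched gold box by IoU."""
    unmatched = list(gold)
    true_positives = 0
    for vb in vendor:
        best = max(unmatched, key=lambda gb: iou(vb, gb), default=None)
        if best is not None and iou(vb, best) >= threshold:
            unmatched.remove(best)  # each gold box can be matched only once
            true_positives += 1
    precision = true_positives / len(vendor) if vendor else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall


gold = [(0, 0, 10, 10), (20, 20, 30, 30)]
vendor = [(1, 1, 10, 10), (50, 50, 60, 60)]  # one good box, one false alarm
print(pilot_precision_recall(vendor, gold))  # (0.5, 0.5)
```

Low precision means the vendor draws boxes where there is nothing to label; low recall means it misses objects your team labeled.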

Video Data Annotation Projects with TaskUs

With over a decade of outsourcing expertise, TaskUs is the preferred partner for human capital and process expertise in image and video annotation outsourcing. Our accomplished team of data-tagging experts has a proven track record of collecting, annotating, and evaluating large volumes of visual data at scale. Not only do we deliver accurately, consistently, and reliably, we also strive to exceed the agreed KPIs.

References
