
Generative AI Makes Content Moderation Both Easier and Harder

The internet revolutionized the way we connect with each other and access information, but it has also created fertile ground for harmful content. The problem grows as the technology becomes more capable, and that sophistication calls for an equally sophisticated approach to content moderation.

Deepfakes, for example, are increasingly used in fraudulent schemes and to spread false information. Identity security company Onfido reports a staggering 3,000% increase in deepfake fraud attempts from 2022 to 2023.[1] As opportunities for abuse grow, content moderation practices must advance just as significantly to keep users safe. Traditional methods such as keyword filtering can no longer address these challenges.
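To see why keyword filtering falls short, consider a minimal sketch of a blocklist filter. The blocklist and function name are hypothetical, chosen only for illustration. Even trivial obfuscation, such as swapping a letter for a digit or spacing out characters, slips past it.

```python
import re

# Hypothetical blocklist for illustration only.
BLOCKLIST = {"scam", "fraud"}


def keyword_filter(text: str) -> bool:
    """Return True if any blocklisted term appears as a whole word."""
    # Lowercase, then split into runs of letters; digits and spaces break words apart.
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BLOCKLIST for word in words)


if __name__ == "__main__":
    for sample in ("This is a scam", "This is a sc4m", "s c a m"):
        print(repr(sample), keyword_filter(sample))
```

Only the first sample is caught; the obfuscated variants pass through, which is the gap that context-aware models aim to close.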

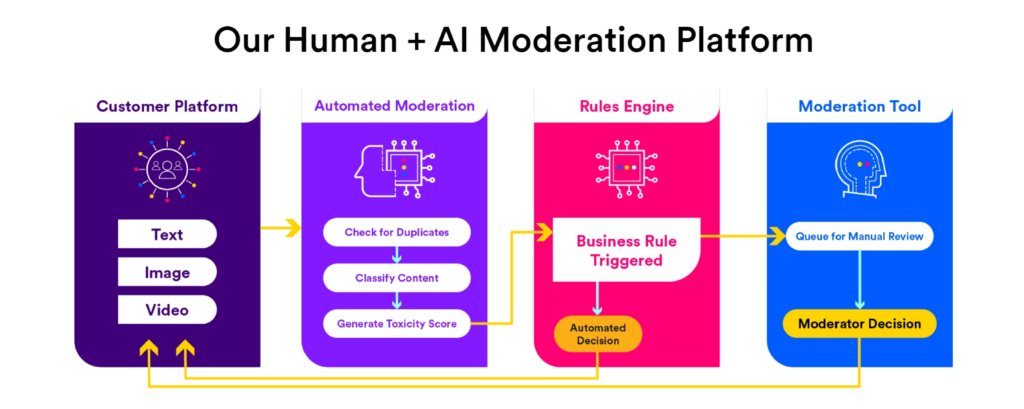

The answer is to combine human expertise with AI-powered moderation. With Generative AI, platforms can quickly filter large volumes of content, freeing human teams to focus on complex cases that require cultural context and nuanced judgment.

The speed of Generative AI with the power of human judgment

Using AI in online content moderation significantly increases review speed. Automated content moderation tools can analyze massive amounts of text, images, and videos in a fraction of the time human moderators require. AI systems also operate 24/7, which helps global platforms serve billions of users across different time zones.

However, even with these advantages, human judgment, or a “human-in-the-loop” approach, remains critical. Some nuanced, complex scenarios demand ethical reasoning and an appreciation of cultural context that only human insight can provide. Where subtle judgment is needed, AI plays a supporting role and defers to human moderators, who bring empathy and understanding to these gray areas.
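One common way to implement this division of labor is confidence-based routing: a model's estimated probability that an item violates policy decides whether it is handled automatically or sent to a person. The sketch below assumes a hypothetical classifier that returns a score in [0, 1]; the threshold values and names are illustrative and do not describe any specific vendor's system.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_ALLOW = "auto_allow"      # model is confident the content is fine
    AUTO_REMOVE = "auto_remove"    # model is confident the content violates policy
    HUMAN_REVIEW = "human_review"  # gray area: defer to a human moderator


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs; in practice these would be tuned per policy and language.
    allow_below: float = 0.10
    remove_above: float = 0.95


def route(violation_score: float, t: Thresholds = Thresholds()) -> Route:
    """Route one item based on the model's estimated probability of a violation."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be a probability in [0, 1]")
    if violation_score < t.allow_below:
        return Route.AUTO_ALLOW
    if violation_score > t.remove_above:
        return Route.AUTO_REMOVE
    # Everything between the two thresholds goes to a person.
    return Route.HUMAN_REVIEW


if __name__ == "__main__":
    # Made-up scores standing in for classifier output.
    for score in (0.02, 0.50, 0.99):
        print(score, route(score).value)
```

Widening the band between the two thresholds sends more content to human reviewers and less to automation; narrowing it does the opposite.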

Humans also play a crucial role in identifying and correcting biases in Generative AI. By analyzing flagged content and user feedback, they can help refine the models' training data to make moderation fairer and more culturally relevant.

Applying Generative AI correctly in content moderation is difficult to do in-house, however. Businesses should consider the following:

Bias and fairness: AI models learn from data, so biases in training data can lead to skewed moderation results.

False positives and negatives: AI moderation systems are susceptible to errors. They can incorrectly flag harmless content as problematic (a false positive) or, conversely, miss content that actually breaches guidelines (a false negative).

Privacy concerns: Maintaining user trust and safeguarding data security are non-negotiable. Businesses must process sensitive information in compliance with stringent privacy regulations.

Adaptability: Bad actors are constantly finding ways to bypass moderation systems. Generative AI must also continuously adapt and stay current with these tactics to recognize and counter them effectively.

Language model limitations: Large Language Models (LLMs) are trained mostly on English text, which leads to weaker performance in other languages.
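False positives and false negatives are usually tracked by comparing a model's flags against labels from human reviewers and summarizing the result as precision (how many flags were correct) and recall (how many violations were caught). A minimal sketch, assuming a hypothetical sample where each item is marked True if it violates policy:

```python
def confusion_counts(predicted: list[bool], actual: list[bool]) -> dict[str, int]:
    """Tally model flags against human labels; True means 'violates policy'."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for p, a in zip(predicted, actual):
        if p and a:
            counts["tp"] += 1  # violation correctly flagged
        elif p and not a:
            counts["fp"] += 1  # harmless content wrongly flagged (false positive)
        elif not p and a:
            counts["fn"] += 1  # violation missed (false negative)
        else:
            counts["tn"] += 1  # harmless content correctly allowed
    return counts


def precision_recall(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Return (precision, recall), using 0.0 when a denominator is zero."""
    c = confusion_counts(predicted, actual)
    flagged = c["tp"] + c["fp"]
    violations = c["tp"] + c["fn"]
    precision = c["tp"] / flagged if flagged else 0.0
    recall = c["tp"] / violations if violations else 0.0
    return precision, recall


if __name__ == "__main__":
    # Made-up example: model flags vs. human moderator labels.
    model_flags = [True, True, False, False, True, False]
    human_labels = [True, False, True, False, True, False]
    print(confusion_counts(model_flags, human_labels))
    print(precision_recall(model_flags, human_labels))
```

More false positives lower precision, and more false negatives lower recall; raising a model's flagging threshold generally trades recall for precision.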

The risks of using AI in online content moderation

Using AI improperly in online content moderation can have several direct consequences:

Reduced user trust

Biased or error-prone moderation erodes user trust, leading to decreased engagement and loyalty.

Financial loss

Failing to moderate content effectively can lead to legal issues, fines, and advertiser distrust, all of which hurt a company’s bottom line.

Reputational damage

A platform's content affects its reputation. Failure to uphold community safety standards can severely damage a brand’s image.

Outsourcing AI moderation addresses many of these challenges. The benefits start with access to specialized expertise and advanced technology that most businesses don’t have in-house.

Outsourcing also makes round-the-clock coverage across languages and time zones easier to achieve without an extensive internal workforce. Moreover, outsourcing providers offer scalable solutions that adjust to fluctuating volumes of user-generated content (UGC), so moderation quality doesn’t dip during high-traffic periods.

TaskUs: Your content moderation partner

As a leading Trust and Safety service provider, TaskUs developed the Safety Operations Center, an end-to-end content moderation platform. We recognize the strengths and weaknesses of Generative AI and LLM content moderation, so we integrate these tools within a framework built around human supervision. This way, we protect content integrity and platform safety in a sophisticated, scalable manner.

Additionally, with a global footprint, TaskUs is well positioned to help train Generative AI and natural language processing models to be just as sharp in other languages as they are in English.

At the same time, a team of clinicians and behavioral scientists looks after our moderators' mental well-being. This people-centric strategy not only fosters a healthier environment for our content moderators but also contributes to a safer online experience for users.

References
