A Content Moderation Platform that combines the power of AI + Human moderation

By 2022, the internet reached 65% of the world's population, a staggering 5.2 billion people. The number of users and the volume of uncurated content continue to grow exponentially. With that growth comes an increase in the complexity and frequency of policy violations, which creates ongoing trust and safety challenges for platforms.

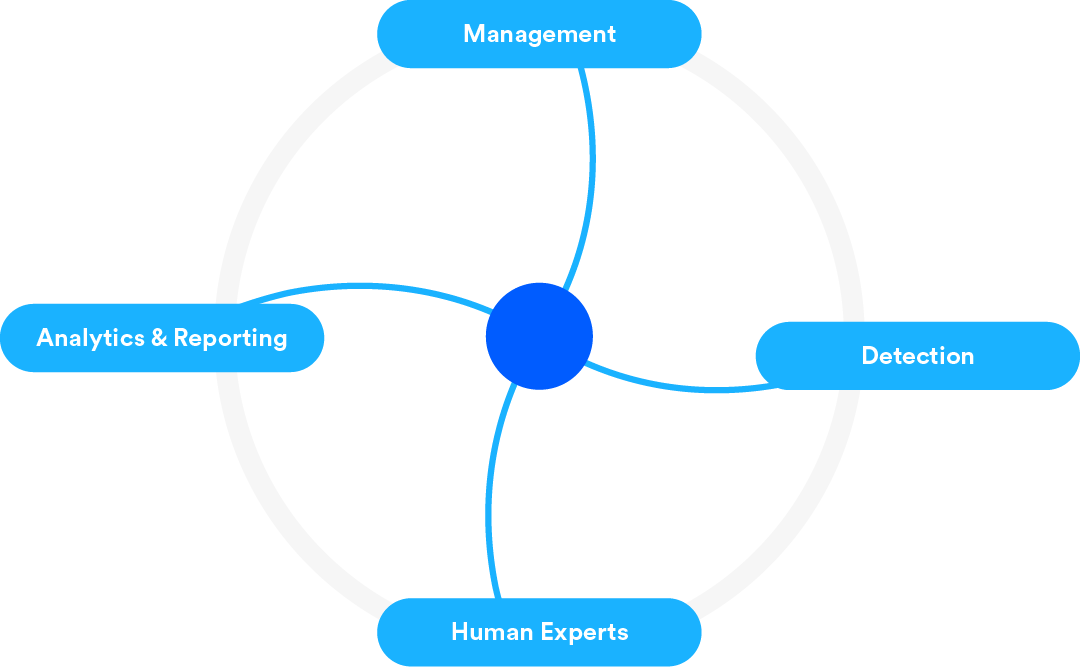

- T&S Policy Configuration

- Cost/Tolerance Dial

- Granular Business Rules

- Toxicity Scorecards

- Content Trends

- Transparency Reporting

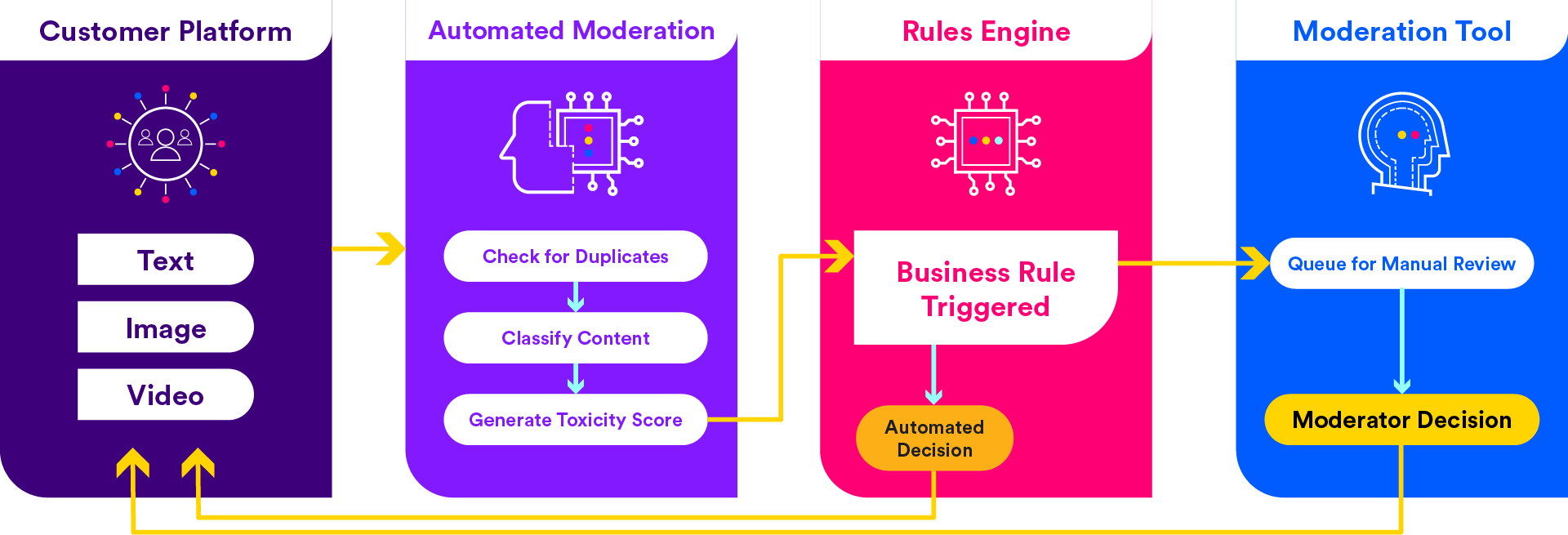

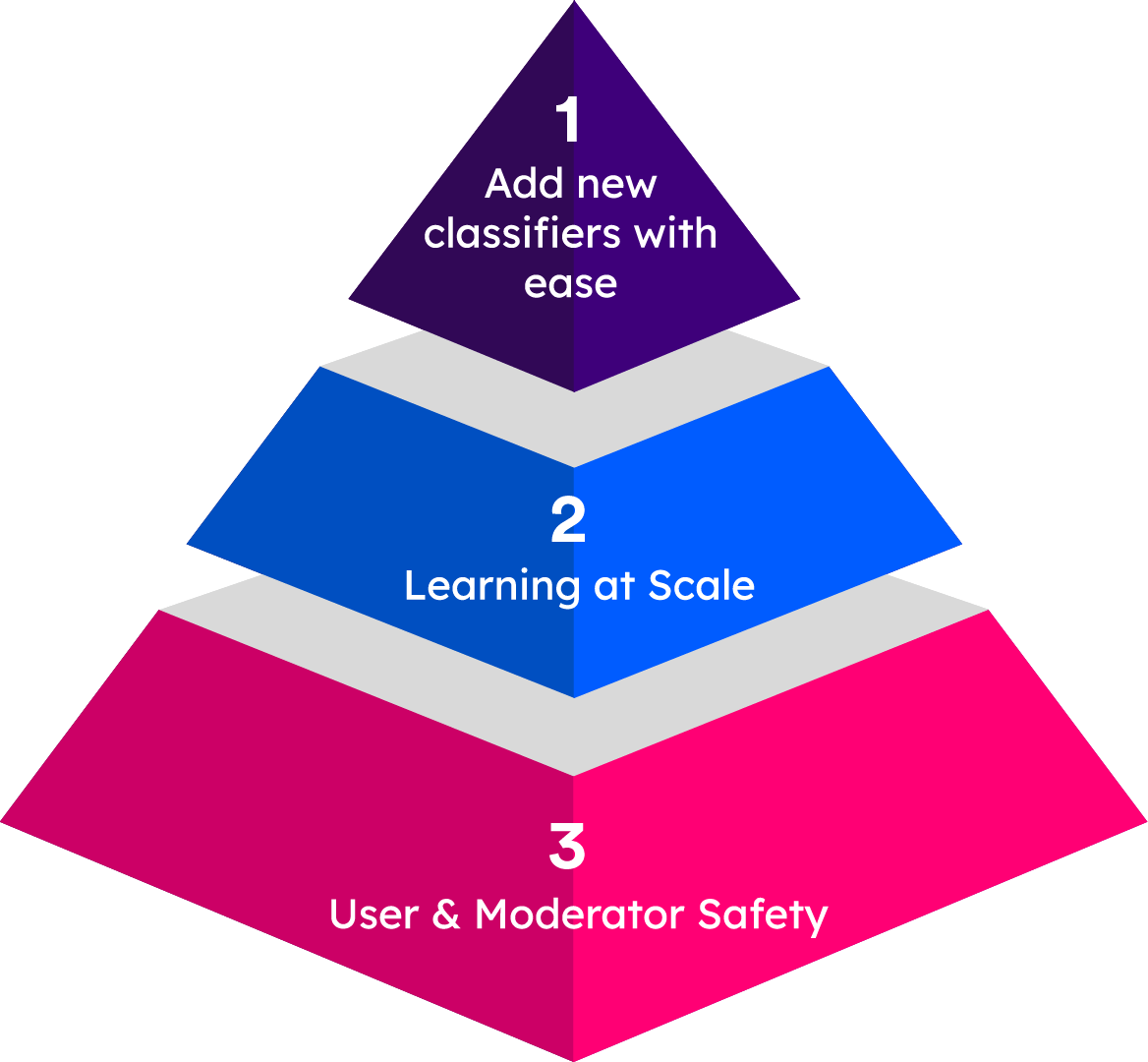

- AI Moderation

- Toxicity Scoring

- Add New Classifiers

- Continuous Improvement

- Moderation

- Data Labelling & Tagging

- Wellness + Resiliency

- Policy Insights

By combining custom and contextual lexicons and overlaying smart translations, we can build entirely new, complex language models that deliver F1 scores above 90%. For more specialized languages or issue types, where reliable training data is harder to come by, we can leverage our crowdsourcing platform, the TaskVerse.

To reduce bias, we continuously test our systems against Ethical AI principles. We also analyze content across multiple platforms for emerging patterns to better understand evolving threats online. The result is more accurate automation and better-informed moderators who can stay on top of toxic trends.

In every country where our moderators operate, we have dedicated clinicians and behavioral health researchers supporting the psychological health and safety of our teams. This intensive layer of support has been proven to improve general wellbeing, reduce attrition, and increase operational performance. All of this ultimately leads to a better and safer experience for the users on your platform.

